Since the rise of ChatGPT, many jobs have come to rely on it, whether for preparing a meeting summary, answering technical or financial questions, or performing mathematical calculations. Generative AI (GenAI) chatbots built on models like OpenAI’s GPT-4 can provide impressive answers, but have you considered how your business could use GenAI with its own proprietary data?

Imagine integrating a GenAI model with your organization’s specific data sources, enabling it to search and generate insights based on your unique datasets. This approach empowers your business to utilize intelligent models, such as OpenAI’s GPT-4, while ensuring the chatbot is informed by your company’s products, services, and internal knowledge base.

For example, when you ask the chatbot about your products or services, or ask it to analyze and report on specific data, it can provide precise and contextually relevant answers. This supports better decision-making and tailors GenAI to your organization’s needs.

How Can Businesses Enhance Chatbot Precision with Their Own Data?

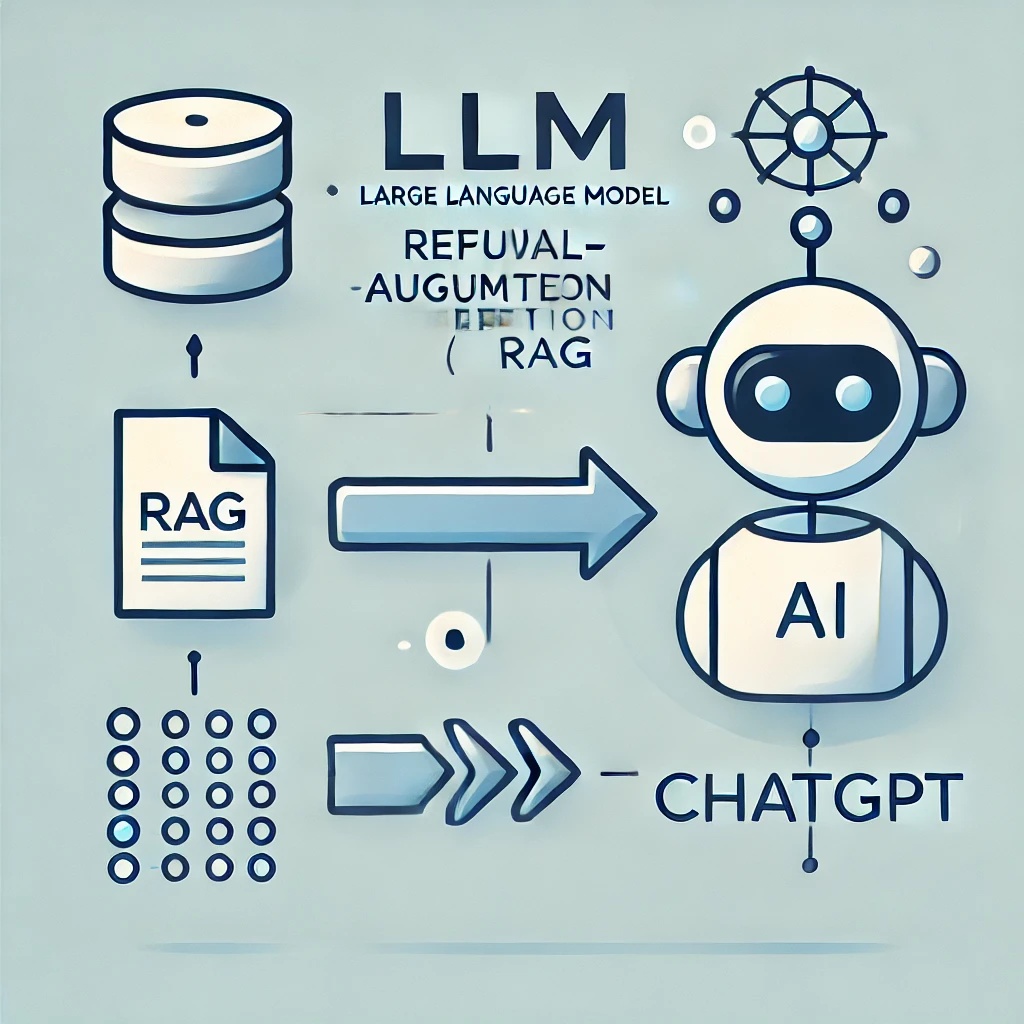

Retrieval-Augmented Generation (RAG) is a powerful technique for enhancing the capabilities of Large Language Models (LLMs). It connects the model to your organization’s knowledge base: when a prompt (question) arrives, the most relevant passages are retrieved and added to the prompt before the LLM processes it. This allows the chatbot to deliver precise, contextually relevant answers tailored to your business needs.
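To make the retrieve-then-prompt idea concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how any particular product implements RAG: the `KNOWLEDGE_BASE` passages, the function names, and the prompt wording are all hypothetical, and simple word overlap stands in for the embedding-based similarity search a real system would more likely use. The sketch stops at building the prompt; sending it to an LLM is left out.

```python
import re
from collections import Counter

# Hypothetical knowledge base: in practice these would be chunks of your own documents.
KNOWLEDGE_BASE = [
    "The Traversal endpoint accepts offset, limit, min_depth and max_depth as optional integer parameters.",
    "The Accounts endpoint returns billing details for a customer account.",
    "Authentication uses an API key sent in the request headers.",
]


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def overlap_score(query, passage):
    """Count the tokens shared by query and passage (a stand-in for embedding similarity)."""
    shared = Counter(tokenize(query)) & Counter(tokenize(passage))
    return sum(shared.values())


def retrieve(query, passages, k=1):
    """Return the k passages that share the most tokens with the query."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


question = "What parameters does the Traversal endpoint accept?"
top_passages = retrieve(question, KNOWLEDGE_BASE, k=1)
prompt = build_prompt(question, top_passages)
print(prompt)  # This augmented prompt is what would be sent to the LLM.
```

The design point is that the model itself is unchanged; only the prompt is enriched with passages selected for the current question.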

Example of How This Can Help Businesses

In the Software Industry:

The following demonstrates how integrating data into AI systems can provide significant value. The image below illustrates the data resource (API documentation) that needs to be integrated into the LLM.

In my example, I uploaded complex API documentation and asked the chatbot which parameters the Traversal endpoint accepts. The chatbot provided the following response:

The Traversal endpoint accepts the following parameters:

- offset: integer (optional)

- limit: integer (optional)

- min_depth: integer (optional)

- max_depth: integer (optional)

I provided the following prompt to the RAG-optimized LLM:

“write code in python that uses traversal pagination endpoint with the parameters it explained in the documentation”

The LLM responded with the following Python code example:

Here is an example of how you can make a request using the traversal pagination endpoint in Python:

    import requests

    url = 'https://api.example.com/v1/traversal/:id'
    headers = {'Accept': 'application/json'}
    params = {'offset': 0, 'limit': 50, 'min_depth': 0, 'max_depth': 5, 'qualifier': 'eq'}

    response = requests.get(url, headers=headers, params=params)

    if response.status_code == 200:
        data = response.json()
        # Process the data as needed
        print(data)
    else:
        print('Error: Failed to retrieve data from the traversal endpoint')

In this code snippet, we are sending a GET request to the traversal endpoint with the specified parameters and headers. If the response status code is 200 (indicating success), we print out the retrieved data. Otherwise, we print an error message.
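Because the endpoint takes `offset` and `limit`, a natural next step is to walk through every page of results. The sketch below shows one way that loop might look. It rests on assumptions the documentation excerpt does not confirm: that each request returns a list of items, and that a page shorter than `limit` marks the end. `iterate_pages` and `fake_fetch_page` are hypothetical names, and the fake fetcher stands in for the real HTTP call so the example runs without network access.

```python
def iterate_pages(fetch_page, limit=50, max_pages=100):
    """Yield items page by page, advancing offset until a short page is returned.

    fetch_page is any callable taking offset and limit and returning a list.
    max_pages is a safety cap so an unexpected response cannot loop forever.
    """
    offset = 0
    for _ in range(max_pages):
        items = fetch_page(offset=offset, limit=limit)
        yield from items
        if len(items) < limit:  # Assumption: a short (or empty) page means no more data.
            break
        offset += limit


def fake_fetch_page(offset, limit):
    """Hypothetical stand-in for the HTTP request: serves slices of 120 fake records."""
    records = list(range(120))
    return records[offset:offset + limit]


all_items = list(iterate_pages(fake_fetch_page, limit=50))
print(len(all_items))
```

In real use, `fetch_page` would wrap the `requests.get` call shown earlier and return the parsed list from the response body, whatever shape the actual API uses.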